
ICT Global Trend Part7-3

Ethical AI Guidelines and Principle of Transparency (1/2)

This article was written by Mr. Yusuke Koizumi, Chief Fellow, Institute for International Socio-Economic Studies (NEC Group).

Ethical principles to prevent harm and negative impact of AI

AI (Artificial Intelligence) is expected to bring great benefits to individuals, companies and society. At the same time, there is concern that it may cause new harm and negative impacts on individuals and society.

Governments in Japan and abroad have already issued or announced guidelines on basic principles for AI development and utilization. In Japan, the Conference toward AI Network Society under the Ministry of Internal Affairs and Communications announced the draft AI Utilization Guidelines in June 2019, and the Conference on Social Principles for Human-Centric AI under the Cabinet Secretariat announced the Social Principles for AI in March 2019. Abroad, for example, the High-Level Expert Group (HLEG) on AI set up by the European Commission released the Ethics Guidelines for Trustworthy AI in April 2019, and in the UK the Select Committee on AI of the House of Lords published a report in April 2018 that includes five overarching principles for an AI Code. In the USA, AI guidelines have been drafted and issued under the leadership of private industry; a well-known example is the Asilomar AI Principles, released in 2017. The OECD (Organisation for Economic Co-operation and Development) also adopted the OECD Principles on AI in May 2019. At the G20 Ministerial Meeting on Trade and Digital Economy held in Ibaraki-Tsukuba in June 2019, a ministerial statement containing the same principles as the OECD Principles was also adopted.

The AI ethics guidelines of the European Commission’s HLEG stress that AI should be human-centric, should respect fundamental human rights, and should be trustworthy, so that AI can work to enhance human wellbeing and the common good. To realize trustworthy AI, the HLEG presented a framework with five components: (1) fundamental rights, (2) ethical principles, (3) requirements for Trustworthy AI, (4) technical and non-technical methods to realize Trustworthy AI, and (5) an assessment list for Trustworthy AI.

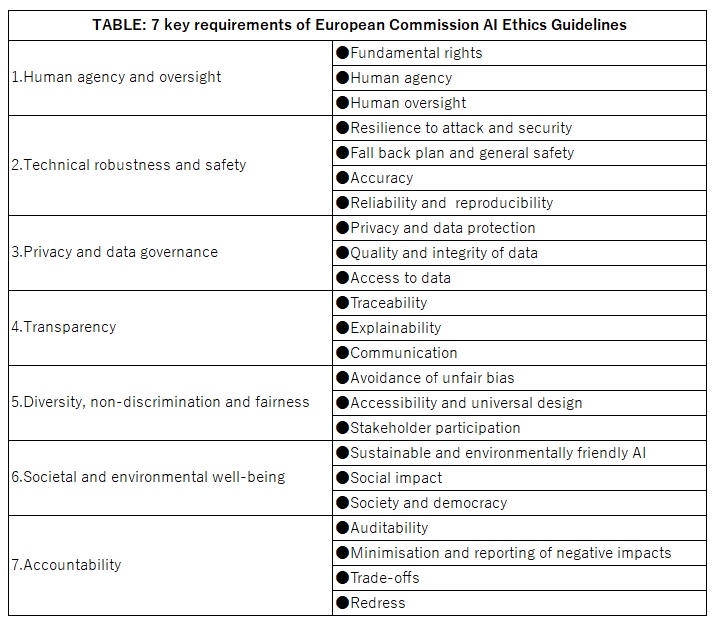

Item (2), ethical principles, consists of four principles: “respect for human autonomy”, “prevention of harm”, “fairness” and “explicability”. Item (3), requirements for Trustworthy AI, covers seven requirements for implementing Trustworthy AI that build on the ethical principles, as separately detailed in the table shown below in this report. Item (4), technical and non-technical methods, is proposed as a means to evaluate and address these seven requirements. Technical methods include “ethics by design”, “explainable AI (XAI)” and others. Non-technical methods include “regulation”, “codes of conduct”, “standardization”, “certification” and others. Item (5), the assessment list for Trustworthy AI, is a pilot version of a checklist for evaluating and assessing compliance with these seven key requirements.

Regarding the principle of “explicability”, which is closely related to the theme of this report, the transparency of AI, the HLEG guidelines say, “an explanation as to why a model has generated a particular output or decision (and what combination of input factors contributed to that) is not always possible. These cases are referred to as ‘black box’ algorithms and require special attention. In those circumstances, other explicability measures (e.g. traceability, auditability and transparent communication on system capabilities) may be required”.

The next report will explain the transparency of AI.