
ICT Global Trend Part6-7

Privacy Issues with AI (1/2): Risks of undesired disclosure of personal information

This article was written by Mr. Yusuke Koizumi, Chief Fellow, Institute for International Socio-Economic Studies (NEC Group).

Many privacy issues have been raised concerning the use of AI (Artificial Intelligence) and Big Data.

Among them, the most fundamental is the issue of “profiling”.

The EU (European Union) regulates profiling in the GDPR (General Data Protection Regulation), which became applicable in May this year.

Japan's Act on the Protection of Personal Information, as currently in force, contains no specific provisions on profiling. However, “so-called profiling” was listed as one of the issues for further discussion in the Policy Outline of the Institutional Revision for Utilization of Personal Data, announced in 2014.

First, what is “profiling”? Profiling was developed in criminal investigation. It is a technique for inferring characteristics of a criminal suspect, such as age, gender, profession, family composition, and personality, from the situation at the crime scene and the suspect's behavior patterns.

Profiling in AI and Big Data is similar to this criminal profiling. Roughly speaking, it is a technique for inferring unknown information about a person, or predicting that person's future behavior, from known information associated with them.

A typical example is inferring or predicting a targeted person's income, personal tastes, or purchasing behavior from, for example, that person's purchase records.
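To make the idea concrete, here is a minimal sketch of profiling as inference, assuming a simple logistic scoring model. The item categories, the weights in `WEIGHTS`, the baseline `BIAS`, the inferred attribute (“high income”), and the function name `infer_probability` are all hypothetical; real profiling systems typically learn such weights from large data sets rather than setting them by hand.

```python
import math

# Hypothetical weights: how strongly buying each item category shifts the
# log-odds that the shopper belongs to a "high income" group. Illustrative only.
WEIGHTS = {
    "imported_wine": 1.5,
    "organic_produce": 0.7,
    "discount_coupon_used": -1.2,
}
BIAS = -1.0  # hypothetical baseline log-odds before any purchase evidence


def infer_probability(purchases: list[str]) -> float:
    """Estimate P(high income | purchase history) with a logistic score.

    The person never states their income; it is inferred from known data.
    Each distinct category counts once; unknown categories contribute 0.
    """
    score = BIAS + sum(WEIGHTS.get(item, 0.0) for item in set(purchases))
    return 1.0 / (1.0 + math.exp(-score))  # logistic (sigmoid) function
```

For example, `infer_probability(["imported_wine", "organic_produce"])` returns about 0.77, although the shopper never disclosed their income.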

Strictly speaking, what the GDPR mainly regulates is not profiling itself but decisions based solely on automated processing, including profiling (more specifically, decisions that significantly affect data subjects). For example, an automated decision in a loan-screening system based on profiling, such as the applicant's credit score, falls into this category of “automated decision-making”.
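A minimal sketch of this distinction, assuming a hypothetical loan system: `automated_loan_decision` decides solely by comparing a profiling-derived credit score with a fixed cut-off, while `decision_with_human_review` routes rejections to a person instead of finalizing them automatically. The threshold, the `Applicant` class, and both function names are illustrative assumptions, not part of any real system or of the GDPR text.

```python
from dataclasses import dataclass

APPROVAL_THRESHOLD = 650  # hypothetical cut-off score


@dataclass
class Applicant:
    name: str
    credit_score: int  # assumed to be precomputed by a profiling model


def automated_loan_decision(applicant: Applicant) -> str:
    """Decision based solely on automated processing: no person reviews it."""
    return "approve" if applicant.credit_score >= APPROVAL_THRESHOLD else "reject"


def decision_with_human_review(applicant: Applicant) -> str:
    """Same score, but an adverse outcome is not final until a person reviews it."""
    outcome = automated_loan_decision(applicant)
    return "pending_human_review" if outcome == "reject" else outcome
```

Only adverse outcomes are routed to review here, because those are the decisions that significantly and unfavorably affect the applicant.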

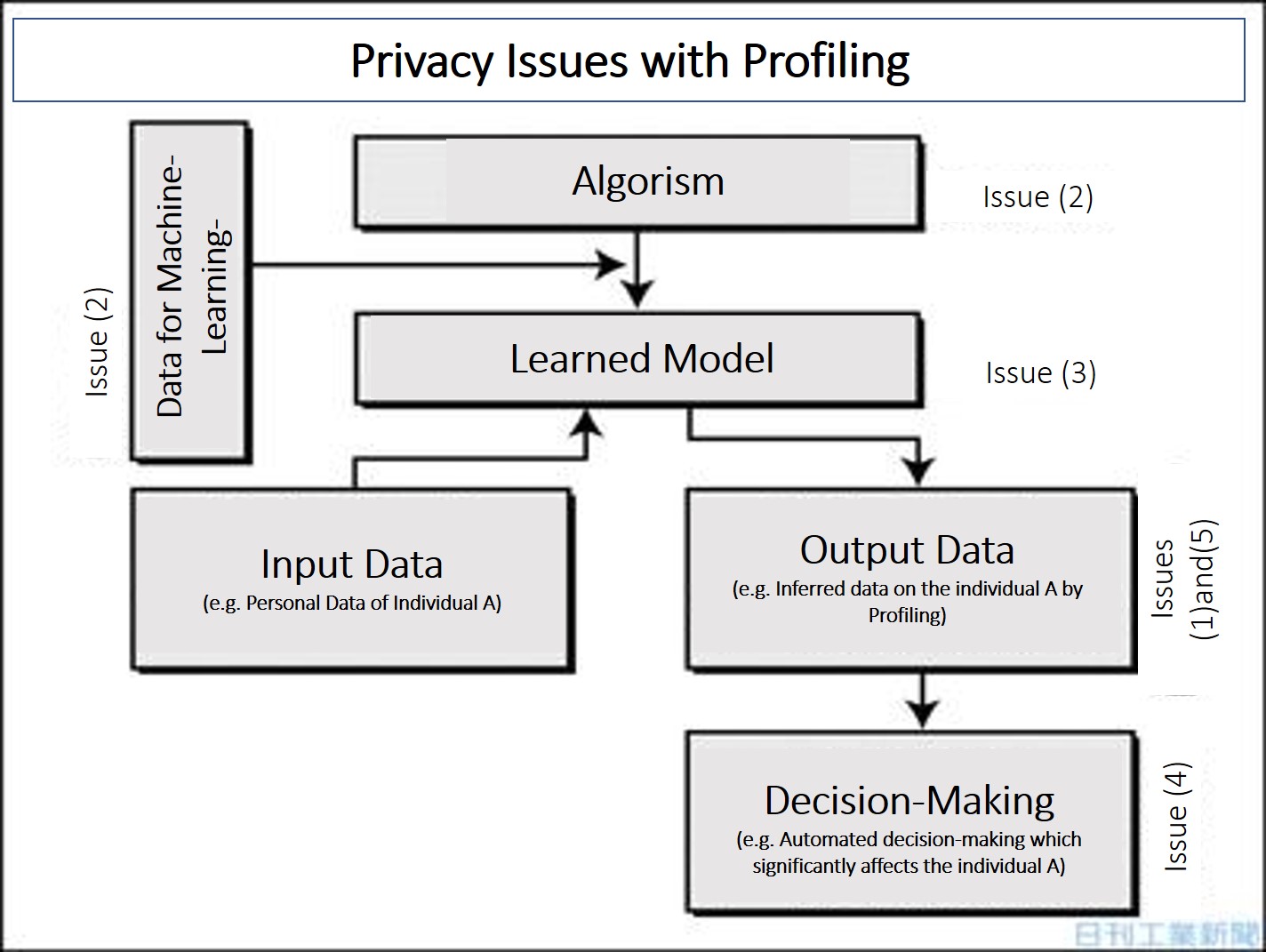

Profiling and automated decision-making raise a variety of privacy issues.

For example,

(1) Profiling may infer even personal information that is highly sensitive or that the person does not want disclosed.

If Big Data and AI substantially improve inference accuracy, inferring data that people do not want disclosed, such as personal tastes, health conditions, and annual income, becomes practically equivalent to actually collecting that personal data.

One well-known story is that profiling of a teenage girl based on her supermarket purchase records concluded, with very high probability, that she was pregnant.

As a result, pregnancy-related direct mail (D/M) was sent to her home before her family knew of the pregnancy.

Other issues include the following:

(2) How to prevent social discrimination from being reinforced or newly created when AI algorithms or machine-learning training data contain bias.

(3) How to fulfill social responsibility when algorithms are opaque (“black boxes”).

(4) How to handle disputes when automated decision-making produces a decision that is disadvantageous or unfavorable to the individual (for example, in employment, loan applications, or insurance terms).

(5) How to judge the accuracy of predictions when profiling assesses an individual's future risk of illness, disease, criminal offense, and so on.
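Item (5) can be made concrete with Bayes' theorem. The sketch below, using hypothetical figures, computes the share of flagged individuals who are actually at risk (the positive predictive value) for a predictor with a given sensitivity and specificity when the predicted event is rare. The function name `positive_predictive_value` is illustrative.

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """P(actually at risk | flagged), by Bayes' theorem.

    PPV = sens * p / (sens * p + (1 - spec) * (1 - p)), where p is prevalence.
    """
    true_pos = sensitivity * prevalence                  # at risk and flagged
    false_pos = (1.0 - specificity) * (1.0 - prevalence)  # not at risk, flagged
    return true_pos / (true_pos + false_pos)
```

With an assumed 99% sensitivity, 99% specificity, and a prevalence of 1 in 1,000, `positive_predictive_value(0.99, 0.99, 0.001)` is about 0.09: roughly nine in ten people flagged as at risk would be misjudged, despite the predictor's seemingly high accuracy.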